Issue:

Unable to connect to the remote server at Microsoft.WindowsAzure.Storage.Core.Executor.Executor.ExecuteSync[T](RESTCommand`1 cmd, IRetryPolicy policy, OperationContext operationContext)at Microsoft.WindowsAzure.Storage.Table.CloudTable.Exists(Boolean primaryOnly, TableRequestOptions requestOptions, OperationContext operationContext) at Microsoft.WindowsAzure.Storage.Table.CloudTable.CreateIfNotExists(TableRequestOptions requestOptions, OperationContext operationContext) at Microsoft.DynamicsOnline.Infrastructure.Components.TableAccessor.TableStorageAccessor.PerformOperation(CloudStorageAccount storageAccount, String tableName, Func`1 operation) at Microsoft.DynamicsOnline.Infrastructure.Components.TableAccessor.TableStorageAccessor.AddRecord[T](CloudStorageAccount storageAccount, String tableName, T record) at

Reason:

This issue is coming because Azure emulator is not running.

Resolution:

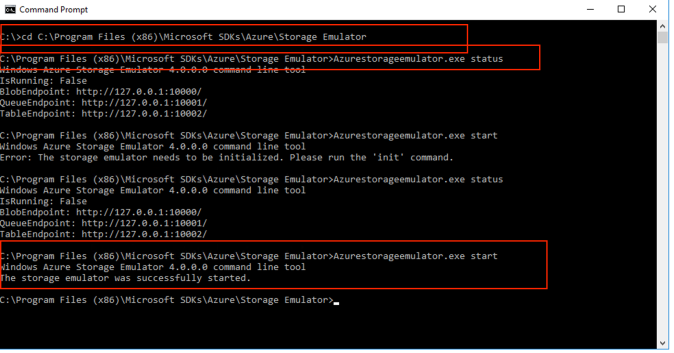

Issue can be checked and resolved using below commands.